Productivity

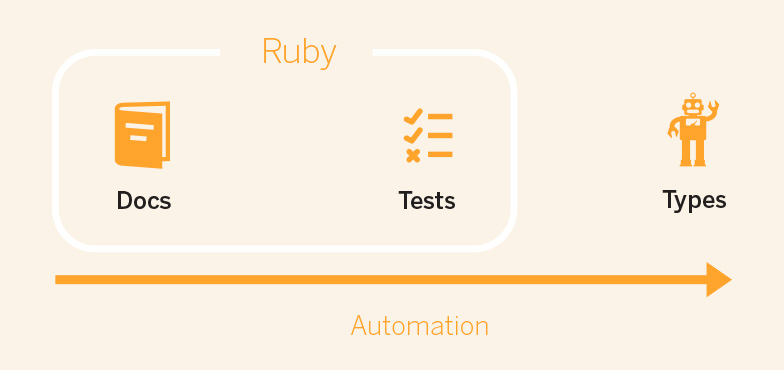

Ruby developers often wax enthusiastic about the speed and agility with which they are able to write programs, and have relied on two techniques more than any other to support this: tests and documentation.

After spending some time looking into other languages and language communities, it’s my belief that as Ruby developers, we are missing out on a third crucial tool that can extend our design capabilities, giving us richer tools with which to reason about our programs. This tool is a rich type system.

To be clear, I am in no way saying that tests and documentation do not have value, nor am I saying that the addition of a modern type system to Ruby is necessary for a certain class of applications to succeed – the number of successful businesses started with Ruby and Rails is proof enough. Rather, I am saying that a richer type system with a well-designed type checker could give our designs several advantages that are hard to achieve with tests and documentation alone:

- Truly executable documentation

Types declared for methods or fields are enforced by the type checker. Annotated classes are easy to parse by developers and documentation can be extracted from type annotations.

- Stable specification

Tests which assert the input and return values of methods are brittle, raise confusing errors, and bloat test suites; documentation gets out of sync. Type annotations change with your implementation and can help maintain interface stability.

- Meaningful error messages

Type checkers are valuable in part because they bridge the gap between the code and the meaning of a program. Error messages which inform you not only that you made a mistake, but how (and potentially how to fix it) are possible with the right tools.

- Type driven design

Considering the design of a module of a program through its types can be an interesting exercise. With advancements in type checking and inference for dynamic programming languages, it may be possible to rely on these tools to help guide our program design.

Integrating traditional typing into a dynamic language like Ruby is inherently challenging. However, in searching for a way to integrate these design advantages into Ruby programs, I have come across a very interesting body of research about “gradual typing” systems. These systems exist to include, typically on a library level, the kinds of type checking and inference functionality that would allow Ruby developers to benefit from typing without the expected overhead. [1]

In doing this research I was pleasantly surprised to find that four researchers from the University of Maryland’s Department of Computer Science have designed such a system for Ruby, and have published a paper summarizing their work. It is presented as “The Ruby Type Checker” which they describe as “…a tool that adds type checking to Ruby, an object-oriented, dynamic scripting language.” [2] Awesome, let’s take a look at it!

The Ruby Type Checker

The implementation of the Ruby Type Checker (rtc) is described by the authors as “a Ruby library in which all type checking occurs at run time; thus it checks types later than a purely static system, but earlier than a traditional dynamic type system.” So right away we see that this tool isn’t meant to change the principal means of development relied on by Ruby developers, but rather to augment it. This is similar to how we think about Code Climate – as a tool which brings information about problems in your code earlier in your process.

What else can it do? A little more from the abstract:

“Rtc supports type annotations on classes, methods, and objects and rtc provides a rich type language that includes union and intersection types, higher-order (block) types, and parametric polymorphism among other features.”

Awesome. Reading a bit more into the paper we see that rtc operates by two main mechanisms:

- Compiling field and method annotations to a data structure that is later used for checks

- Optionally proxying calls through a system that gathers type information, allowing type errors to be raised on method entry and exit
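To make those two mechanisms concrete, here is a toy sketch in plain Ruby. It is not rtc's implementation or API: `TinyTypes`, `tiny_sig`, and `Proxy` are names invented for this illustration, and the checks only use `is_a?`. It also uses `Integer` rather than `Fixnum`, since modern Rubies unified the two.

```ruby
# Toy illustration only: NOT rtc's implementation or API.
module TinyTypes
  class TypeMismatch < StandardError; end

  # Mechanism 1: compile each annotation into a per-class data structure.
  def tiny_sig(method_name, arg_types, return_type)
    tiny_signatures[method_name] = { args: arg_types, returns: return_type }
  end

  def tiny_signatures
    @tiny_signatures ||= {}
  end

  # Mechanism 2: proxy calls so types are checked on method entry and exit.
  class Proxy
    def initialize(target)
      @target = target
      @signatures = target.class.tiny_signatures
    end

    def method_missing(name, *args, &block)
      return super unless @target.respond_to?(name)

      sig = @signatures[name]
      check(name, "argument", args, sig[:args]) if sig
      result = @target.public_send(name, *args, &block)
      check(name, "return value", [result], [sig[:returns]]) if sig
      result
    end

    def respond_to_missing?(name, include_private = false)
      @target.respond_to?(name, include_private) || super
    end

    private

    # Raise when a value is not an instance of its annotated type.
    def check(name, role, values, types)
      values.zip(types).each do |value, type|
        next if type.nil? || value.is_a?(type)
        raise TypeMismatch, "#{name}: #{role} #{value.inspect} does not match #{type}"
      end
    end
  end
end

class Post
  extend TinyTypes

  tiny_sig :timestamp=, [Integer], Integer
  attr_accessor :timestamp
end

post = TinyTypes::Proxy.new(Post.new)
post.timestamp = 1398822693 # passes the entry and exit checks

begin
  post.timestamp = "1398822693" # rejected on method entry
rescue TinyTypes::TypeMismatch => e
  puts e.message
end
```

Because the checks live in the proxy, an unwrapped `Post.new` behaves exactly as before, which is the "use it or not, a la carte" property that makes this style of typing gradual.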

So now let’s see how these mechanisms might be used in practice. We’ll walk through the ways that you can annotate the type of a class’s fields, and show what method type declarations look like.

First, field annotations on a class look like this:

    class Foo
      typesig('@title: String')
      attr_accessor :title
    end

And method annotations should look familiar to you if you’ve seen type declarations for methods in other languages:

    class Foo
      typesig("self.build: (Hash) -> Post")
      def self.build(attrs)
        # ... method definition
      end
    end

Here the input type appears in parentheses, and the return type appears after the -> arrow, which separates a method’s argument types from its return type.

Similar to the work in typed Clojure and typed Racket (two of the more well-developed ‘gradual’ type systems), rtc is available as a library and can be used or not used a la carte. This flexibility is fantastic for Ruby developers. It means that we can isolate parts of our programs which might be amenable to type-driven design, and selectively apply the kinds of run time guarantees that type systems can give us, without having to go whole hog. Again, we don’t have to change the entire way we work, but we might augment our tools with just a little bit more.

How Would We Use Gradual Typing?

Asking the following question on Twitter got me A LOT of opinions, perhaps unsurprisingly:

“What are the canonical moments of ‘Damn, I wish I had types here?’ in a dynamic language?” (mrb, @mrb_bk, April 29, 2014)

The answers ranged from “never” to “always” to more thoughtful responses such as “during refactoring” or “when dealing with data from the outside world.” The latter sounded like a use case to me, so I started daydreaming about what a type checked model in a Rails application would look like, especially one that was primarily accessed through a controller that serves a JSON API.

Let’s look at a Post class:

    class Post
      include PersistenceLogic

      attr_accessor :id
      attr_accessor :title
      attr_accessor :timestamp
    end

This Post class includes some PersistenceLogic so that you can write:

    Post.create({id: "foo", title: "bar", timestamp: 1398822693})

And be happy with yourself, secure that your data is persisted. To wire this up to the outside world, now imagine that this class is hooked up via a PostsController:

    class PostsController
      def create
        Post.create(params[:post])
      end
    end

Let’s assume that we don’t need to be concerned about security here (though that’s something that a richer type system can potentially help us with as well). This PostsController accepts some JSON:

    {
      "post": {
        "id": "0f0abd00",
        "title": "Cool Story",
        "timestamp": "1398822693"
      }
    }

And instead of having to write a bunch of boilerplate code around how to handle timestamp coming in as a string, or title not being present, etc. you could just write:

    class Post
      rtc_annotated
      include PersistenceLogic

      typesig('@id: String')
      attr_accessor :id

      typesig('@title: String')
      attr_accessor :title

      typesig('@timestamp: Fixnum')
      attr_accessor :timestamp
    end

Which might lead you to want a type-checked build method (rtc_annotate triggers type checking on a specific object instance):

    class Post
      rtc_annotated
      include PersistenceLogic

      typesig('@id: String')
      attr_accessor :id

      typesig('@title: String')
      attr_accessor :title

      typesig('@timestamp: Fixnum')
      attr_accessor :timestamp

      typesig("self.build: (Hash) -> Post")
      def self.build(attrs)
        post = new.rtc_annotate("Post")
        post.id = attrs.delete(:id)
        post.title = attrs.delete(:title)
        post.timestamp = attrs.delete(:timestamp)
      end
    end

But, oops! When you run it you see that you didn’t write that correctly:

    [2] pry(main)> Post.build({id: "0f0abd00", title: "Cool Story", timestamp: 1398822693})
    Rtc::TypeMismatchException: invalid return type in build, expected Post, got Fixnum

You can fix that:

    class Post
      rtc_annotated
      include PersistenceLogic

      typesig('@id: String')
      attr_accessor :id

      typesig('@title: String')
      attr_accessor :title

      typesig('@timestamp: Fixnum')
      attr_accessor :timestamp

      typesig("self.build: (Hash) -> Post")
      def self.build(attrs)
        post = new.rtc_annotate("Post")
        post.id = attrs.delete(:id)
        post.title = attrs.delete(:title)
        post.timestamp = attrs.delete(:timestamp)
        post
      end
    end

Okay let’s run it with that test JSON:

    Post.build({
      id: "0f0abd00",
      title: "Cool Story",
      timestamp: "1398822693"
    })

Whoa, whoops!

    Rtc::TypeMismatchException: In method timestamp=, annotated types are [Rtc::Types::ProceduralType(10): [ (Fixnum) -> Fixnum ]], but actual arguments are ["1398822693"], with argument types [NominalType(1)<String>] for class Post

Ah, there ya go:

    class Post
      rtc_annotated
      include PersistenceLogic

      typesig('@id: String')
      attr_accessor :id

      typesig('@title: String')
      attr_accessor :title

      typesig('@timestamp: Fixnum')
      attr_accessor :timestamp

      typesig("self.build: (Hash) -> Post")
      def self.build(attrs)
        post = new.rtc_annotate("Post")
        post.id = attrs.delete(:id)
        post.title = attrs.delete(:title)
        post.timestamp = attrs.delete(:timestamp).to_i
        post
      end
    end

So then you could say:

    Post.build({
      id: "0f0abd00",
      title: "Cool Story",
      timestamp: "1398822693"
    }).save

And be type-checked, guaranteed, and on your way.

Just a Taste

The idea behind this blog post was to get Ruby developers thinking about some of the advantages of using a sophisticated type checker that could programmatically enforce the kinds of specifications that we currently express through documentation and tests. Through all of the debate about how much we should be testing and what we should be testing, we have been potentially overlooking another very sophisticated set of tools which can help augment our designs and guarantee the soundness of our programs over time.

The Ruby Type Checker alone will not give us all of the tools that we need, but it gives us a taste of what is possible with more focused attention on types from the implementors and users of the language.

Works Cited

[1] Gradual typing bibliography

[2] The ruby type checker [pdf]

Editor’s Note: Our post today is from Peter Bell. Peter Bell is Founder and CTO of Speak Geek, a contract member of the GitHub training team, and trains and consults regularly on everything from JavaScript and Ruby development to devOps and NoSQL data stores.

When you start a new project, automated tests are a wonderful thing. You can run your comprehensive test suite in a couple of minutes and have real confidence when refactoring, knowing that your code has really good test coverage.

However, as you add more tests over time, the test suite invariably slows. And as it slows, it actually becomes less valuable — not more. Sure, it’s great to have good test coverage, but if your tests take more than about 5 minutes to run, your developers either won’t run them often, or will waste lots of time waiting for them to complete. By the time tests hit fifteen minutes, most devs will probably just rely on a CI server to let them know if they’ve broken the build. If your test suite exceeds half an hour, you’re probably going to have to break out your tests into levels and run them sequentially based on risk – making it more complex to manage and maintain, and substantially increasing the time between creating and noticing bugs, hampering flow for your developers and increasing debugging costs.

The question then is how to speed up your test suite. There are several ways to approach the problem. A good starting point is to give your test suite a spring clean. Reduce the number of tests by rewriting those specific to particular stories as “user journeys.” A complex, multi-page registration feature might be broken down into a bunch of smaller user stories while being developed, but once it’s done you should be able to remove lots of the story-specific acceptance tests, replacing them with a handful of high-level smoke tests for the entire registration flow, adding in some faster unit tests where required to keep the comprehensiveness of the test suite.

In general it’s also worth looking at your acceptance tests and seeing how many of them could be tested at a lower level without having to spin up the entire app, including the user interface and the database.

Consider breaking out your model logic and treating your active record models as lightweight Data Access Objects. One of my original concerns when moving to Rails was the coupling of data access and model logic and it’s nice to see a trend towards separating logic from database access. A great side effect is a huge improvement in the speed of your “unit” tests as, instead of being integration tests which depend on the database, they really will just test the functionality in the methods you’re writing.
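As a rough sketch of that separation, the example below keeps a business rule in a plain Ruby object that only needs something record-like to read from. All names here are hypothetical: `PostRecord` is a `Struct` standing in for an Active Record model, and `PostPolicy` is an invented home for the logic.

```ruby
# Hypothetical names throughout. PostRecord stands in for an Active Record
# model used purely for data access; the logic lives in PostPolicy.
PostRecord = Struct.new(:title, :published_at)

class PostPolicy
  SECONDS_PER_DAY = 86_400

  # The clock is passed in so the rule can be tested without stubbing Time.
  def initialize(post, now: Time.now)
    @post = post
    @now = now
  end

  def recent?(days: 7)
    return false if @post.published_at.nil?

    (@now - @post.published_at) <= days * SECONDS_PER_DAY
  end
end

now = Time.utc(2014, 5, 1)
fresh = PostRecord.new("Cool Story", now - 2 * 86_400)
stale = PostRecord.new("Old Story", now - 30 * 86_400)

puts PostPolicy.new(fresh, now: now).recent? # true
puts PostPolicy.new(stale, now: now).recent? # false
```

A test for `PostPolicy` never touches the database, so it runs at the speed of an ordinary method call.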

It’s also worth thinking more generally about exactly what is being spun up every time you run a particular test. Do you really need to connect to an internal API or could you just stub or mock it out? Do you really need to create a complex hairball of properly configured objects to test a method or could you make your methods more functional, passing more information in explicitly rather than depending so heavily on local state? Moving to a more functional style of coding can simplify and speed up your tests while also making it easier to reason about your code and to refactor it over time.
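One way to picture both suggestions is a method that receives its collaborator explicitly, so a test can hand it a small fake instead of a live API client. This is only a sketch under assumed names: `PriceConverter`, `FakeRates`, and `rate_for` are invented for the example and do not refer to any real service.

```ruby
# Hypothetical example: the rates object stands in for a client of an
# internal API. Anything that responds to #rate_for(currency) will do.
class PriceConverter
  def initialize(rates)
    @rates = rates
  end

  # Everything the calculation needs arrives as an argument or a collaborator.
  def convert(cents, currency)
    (cents * @rates.rate_for(currency)).round
  end
end

# A hand-rolled fake used in tests in place of the real API client.
class FakeRates
  def initialize(table)
    @table = table
  end

  def rate_for(currency)
    @table.fetch(currency)
  end
end

converter = PriceConverter.new(FakeRates.new("EUR" => 0.5))
puts converter.convert(1000, "EUR") # 500
```

The production code would pass in the real client; the converter itself cannot tell the difference, and the test needs no network access.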

Finally, it’s also worth looking for quick wins that allow you to run the tests you have more quickly. Spin up a bigger instance on EC2 or buy a faster test box and make sure to parallelize your tests so they can leverage multiple cores on developers’ laptops and, if necessary, run across multiple machines for CI.

If you want to ensure your tests are run frequently, you’ve got to keep them easy and fast to run. Hopefully, by using some of the practices above, you’ll be able to keep your tests fast enough that there’s no reason for your dev team not to run them regularly.

Editor’s Note: Today we have a guest post from Marko Anastasov. Marko is a developer and cofounder of Semaphore, a continuous integration and deployment service, and one of Code Climate’s CI partners.

"The act of writing a unit test is more an act of design than of verification." - Bob Martin

A still-common misconception is that test-driven development (TDD) is about testing: that by mandating that tests be written first, TDD minimizes the chance that we go astray and forget to write them. While I’d pick a solution that’s designed for mere mortals over one that assumes we are superhuman any day, the case here is a bit different. TDD is designed to make us think about our code before writing it, using automated tests as a vehicle, which is, by the way, so much better than firing up the debugger to make sure that every piece of code connected to a certain feature is working as expected. The goal of TDD is better software design. Tests are a byproduct.

Through the act of writing a test first, we ponder on the interface of the object under test, as well as of other objects that we need but that do not yet exist. We work in small, controllable increments. We do not stop the first time the test passes. We then go back to the implementation and refactor the code to keep it clean, confident that we can change it any way we like because we have a test suite to tell us if the code is still correct.

Anyone who’s been doing this has found their code design skills challenged and sharpened. Questions like “agh, maybe that private code shouldn’t be private” or “is this class now doing too much?” are constantly flying through your mind.

Test-driven refactoring

The red-green-refactor cycle may come to a halt when you find yourself in a situation where you don’t know how to write a test for some piece of code, or you do, but it feels like a lot of hard work. Pain in testing often reveals a problem in code design, or simply that you’ve come across a piece of code that was not written with the TDD approach. Some smells in test code are frequent enough to be called anti-patterns, and they can point to an opportunity to refactor both test and application code.

Take, for example, a complex test setup in a Rails controller spec.

    describe VenuesController do
      let(:leaderboard) { mock_model(Leaderboard) }
      let(:leaderboard_decorator) { double(LeaderboardDecorator) }
      let(:venue) { mock_model(Venue) }

      describe "GET show" do
        before do
          Venue.stub_chain(:enabled, :find) { venue }
          venue.stub(:last_leaderboard) { leaderboard }
          LeaderboardDecorator.stub(:new) { leaderboard_decorator }
        end

        it "finds venue by id and assigns it to @venue" do
          get :show, :id => 1
          assigns[:venue].should eql(venue)
        end

        it "initializes @leaderboard" do
          get :show, :id => 1
          assigns[:leaderboard].should == leaderboard_decorator
        end

        context "user is logged in as patron" do
          include_context "patron is logged in"

          context "patron is not in top 10" do
            before do
              leaderboard_decorator.stub(:include?).and_return(false)
            end

            it "gets patron stats from leaderboard" do
              patron_stats = double
              leaderboard_decorator.should_receive(:patron_stats).and_return(patron_stats)
              get :show, :id => 1
              assigns[:patron_stats].should eql(patron_stats)
            end
          end
        end

        # one more case omitted for brevity
      end
    end

The controller action is technically not very long:

    class VenuesController < ApplicationController
      def show
        begin
          @venue = Venue.enabled.find(params[:id])
          @leaderboard = LeaderboardDecorator.new(@venue.last_leaderboard)

          if logged_in? and is_patron? and @leaderboard.present? and not @leaderboard.include?(@current_user)
            @patron_stats = @leaderboard.patron_stats(@current_user)
          end
        end
      end
    end

Notice how the extensive spec setup code basically led the developers to forget to write expectations that Venue.enabled.find is called, or LeaderboardDecorator.new is given a correct argument, for example. It is not clear if the assigned @leaderboard comes from the assigned venue at all.

Trapped in the MVC paradigm, the developers (myself included) were piling deep business logic into the controller, making it hard to write a good spec and thus to maintain both of them. The difficulty comes from the fact that even a one-line Rails controller method does many things:

    def show
      @venue = Venue.find(params[:id])
    end

That method is:

- extracting parameters;

- calling an application-specific method;

- assigning a variable to be used in the view template; and

- rendering a response template.

Adding code that reaches deep inside the database and business rules can only turn a controller method into a mess.

The controller above includes one if statement with four conditions. A full spec, then, should cover all 16 (2^4) combinations of those conditions just for this one part of the code. Of course they were not written. But things could be different if this code were outside the controller.
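As a rough check on how quickly the cases multiply, the sketch below enumerates every true/false assignment of four conditions and counts how many of them reach the body of the if statement. The condition names are labels chosen for this illustration, not methods from the application.

```ruby
# Labels for the four conditions in the controller's if statement
# (illustrative names, not the application's methods).
CONDITIONS = %i[logged_in is_patron leaderboard_present patron_in_top_10].freeze

# Every true/false assignment of the four conditions.
combinations = [true, false].repeated_permutation(CONDITIONS.size).map do |values|
  CONDITIONS.zip(values).to_h
end

# Mirrors the shape of the controller's condition.
def assigns_patron_stats?(c)
  c[:logged_in] && c[:is_patron] && c[:leaderboard_present] && !c[:patron_in_top_10]
end

puts combinations.size                                   # 16
puts combinations.count { |c| assigns_patron_stats?(c) } # 1
```

Only one assignment assigns the patron stats, but an exhaustive controller spec would still have to set up each of the others to prove that nothing happens.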

Let’s try to imagine what a better version of the controller spec would look like, and what interfaces it would prefer to work with in order to carry out its job of processing the incoming request and preparing a response.

    describe VenuesController do
      let(:venue) { mock_model(Venue) }

      describe "GET show" do
        before do
          Venue.stub(:find_enabled) { venue }
          venue.stub(:last_leaderboard)
        end

        it "finds the enabled venue by given id" do
          Venue.should_receive(:find_enabled).with(1)
          get :show, :id => 1
        end

        it "assigns the found @venue" do
          get :show, :id => 1
          assigns[:venue].should eql(venue)
        end

        it "decorates the venue's leaderboard" do
          leaderboard = double
          venue.stub(:last_leaderboard) { leaderboard }
          LeaderboardDecorator.should_receive(:new).with(leaderboard)
          get :show, :id => 1
        end

        it "assigns the @leaderboard" do
          decorated_leaderboard = double
          LeaderboardDecorator.stub(:new) { decorated_leaderboard }
          get :show, :id => 1
          assigns[:leaderboard].should eql(decorated_leaderboard)
        end
      end
    end

Where did all the other code go? We’re simplifying the find logic by extending the model:

    describe Venue do
      describe ".find_enabled" do
        before do
          @enabled_venue = create(:venue, :enabled => true)
          create(:venue, :enabled => true)
          create(:venue, :enabled => false)
        end

        it "finds within the enabled scope" do
          Venue.find_enabled(@enabled_venue.id).should eql(@enabled_venue)
        end
      end
    end

The various if statements can be simplified as follows:

- if logged_in? – variations on this can be decided in the view template;

- if @leaderboard.present? – obsolete, the view can decide what to do if it is not;

- The rest can be moved to the decorator class under a new, more descriptive method.

    describe LeaderboardDecorator do
      describe "#includes_patron?" do
        context "user is not a patron" do
        end

        context "user is a patron" do
          context "user is on the list" do
          end

          context "user is NOT on the list" do
          end
        end
      end
    end

This new method will help the view decide whether or not to render @leaderboard.patron_stats, which we do not need to change:

    # app/views/venues/show.html.erb
    <%= render "venues/show/leaderboard" if @leaderboard.present? %>

    # app/views/venues/show/_leaderboard.html.erb
    <% if @leaderboard.includes_patron?(@current_user) -%>
      <%= render "venues/show/patron_stats" %>
    <% end -%>

The resulting controller method is now fairly simple:

    def show
      @venue = Venue.find_enabled(params[:id])
      @leaderboard = LeaderboardDecorator.new(@venue.last_leaderboard)
    end

The next time we work with this code, we might be annoyed that the controller needs to know the right argument to give to a LeaderboardDecorator. We could introduce a new decorator for venues that will have a method that returns a decorated leaderboard. The implementation of that step is left as an exercise for the reader.
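For readers who want a starting point, one possible shape for that venue decorator is sketched below. It is an assumption, not the code from the original project: `VenueDecorator` is a hypothetical name, and `Venue` and `LeaderboardDecorator` are replaced by minimal stand-ins so the example runs outside Rails.

```ruby
require "delegate"

# Stand-ins so the sketch runs outside Rails. In the application these would
# be the real Venue model and the existing LeaderboardDecorator.
Venue = Struct.new(:name, :last_leaderboard)

class LeaderboardDecorator
  attr_reader :leaderboard

  def initialize(leaderboard)
    @leaderboard = leaderboard
  end
end

# Hypothetical decorator: it knows how to build the decorated leaderboard,
# so the controller no longer has to.
class VenueDecorator < SimpleDelegator
  def leaderboard
    @leaderboard ||= LeaderboardDecorator.new(last_leaderboard)
  end
end

venue = VenueDecorator.new(Venue.new("The Anchor", %i[alice bob]))
puts venue.name              # delegated to the wrapped venue
puts venue.leaderboard.class # LeaderboardDecorator
```

With something like this in place, the controller could wrap the result of `Venue.find_enabled(params[:id])` and assign `@leaderboard` from `@venue.leaderboard`, leaving the choice of constructor argument to the decorator.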

Editor’s Note: Today we have a guest post from Oren Dobzinski. Oren is a code quality evangelist, actively involved in writing and educating developers about maintainable code. He blogs about how to improve code quality at re-factor.com.

It’s the beginning of the project. You already have a rough idea of the architecture you’re going to build and you know the requirements. If you’re like me you’ll want to just start coding, but you hold yourself back, knowing that you should really start with an acceptance test.

Unfortunately, it’s not that simple. Your system needs to talk to a datastore or two, communicate with a couple internal services, and maybe an external service as well. Since it’s hard to build both the infrastructure and the business logic at the same time you make a few assumptions in your test and stub out these dependencies, adding them to your TODO list.

A couple of weeks pass, the deadline is getting close, and you come back to your list. But while working on the integration you find out that it’s really a pain to set up one of the datastores, and that there are a few security-related issues with the external service you need to sort out with the in-house security team. You also discover that the behavior of the external service is not what you expected. Maybe the service is slower than you anticipated, or it requires multiple requests that weren’t well documented, or it behaves differently simply because you don’t have a premium account. Oh, and you left the deployment scripts for the end, so now you need to start cranking on those.

Naturally, it’s more complicated than you originally thought. At this point you’re deep in crunch mode and realize you might not hit the deadline because of the additional work you’ve just discovered and the need to wait for other teams for their input.

Deploy A Walking Skeleton First

In order to reduce risks on projects like the above you need to figure out all the unknowns as early as possible. The best way to do this is to have a real end-to-end test with no stubs against a system that’s deployed in production. Enter the Walking Skeleton: a “tiny implementation of the system that performs a small end-to-end function. It need not use the final architecture, but it should link together the main architectural components. The architecture and the functionality can then evolve in parallel.” – Alistair Cockburn. It is discussed extensively in the excellent GOOS book.[1]

If the system needs to talk to one or more datastores then the walking skeleton should perform a simple query against each of them, as well as simple requests against any external or internal service. If it needs to output something to the screen, insert an item to a queue or create a file, you need to exercise these in the simplest possible way. As part of building it you should write your deployment and build scripts, set up the project, including its tests, and make sure all the automations are in place, such as CI integration, monitoring and exception handling. The focus is the infrastructure, not the features. Only after you have your walking skeleton should you write your first acceptance test and begin the TDD cycle.

This is only the skeleton of the application, but the parts are connected and the skeleton does walk in the sense that it exercises all the system’s parts as you currently understand them. Because of this partial understanding, you must make the walking skeleton minimal. But it’s not a prototype and not a proof of concept — it’s production code, so you should definitely write tests as you work on it. These tests will assert things like “accepts a request”, “pushes some content to S3”, or “pushes an empty message to the queue”.
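A minimal sketch of how such checks might be organized is shown below. Everything in it is an assumption made for illustration: `SkeletonCheck` and `run_skeleton` are invented names, and the two probes use a temporary file and an in-process `Queue` as local stand-ins. In a real walking skeleton each probe would talk to the deployed datastore, queue, or service instead.

```ruby
require "tempfile"

# Hypothetical structure: one named probe per architectural component.
SkeletonCheck = Struct.new(:name, :probe)

# Runs every probe and reports true/false per component; a probe that raises
# counts as a failure rather than aborting the whole run.
def run_skeleton(checks)
  checks.map do |check|
    ok = begin
      check.probe.call ? true : false
    rescue StandardError
      false
    end
    [check.name, ok]
  end.to_h
end

queue = Queue.new # local stand-in for a real message queue

checks = [
  SkeletonCheck.new("creates a file", lambda {
    Tempfile.create("skeleton") do |file|
      file.write("ok")
      file.flush
      File.size(file.path) == 2
    end
  }),
  SkeletonCheck.new("pushes an empty message to the queue", lambda {
    queue << ""
    queue.pop == ""
  })
]

run_skeleton(checks).each do |name, ok|
  puts "#{ok ? 'PASS' : 'FAIL'} #{name}"
end
```

The point of the structure is that every component the system depends on gets exactly one trivial, real interaction, and a failure in any of them is visible on day one.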

[1] A similar concept called “Tracer Bullets” was introduced in The Pragmatic Programmer.

Start with the Riskiest Task

According to Hofstadter’s Law, “it always takes longer than you expect, even when you take into account Hofstadter’s Law.” Amazingly, the law is always spot on. It makes sense then to work on the riskiest parts of the project first, which are usually the parts which have dependencies: on third-party services, on in-house services, on other groups in the organization you belong to. It makes sense to get the ball rolling with these groups simply because you don’t know how long it will take and what problems will arise.

Don’t Cut Corners

It’s important to stress that until the walking skeleton is deployed to production (possibly behind a feature flag or just hidden from the outside world) you are not ready to write the first acceptance test. You want to exercise your deployment and build scripts and discover as many potential problems as you can as early as possible.

The Walking Skeleton is a way to validate the design and get early feedback so that it can be improved. You will be missing this feedback if you cut corners or take shortcuts.

Kickstart the TDD process

You can also think about it as a way to start the TDD process. It can be daunting or just too much work to build the infrastructure along with the first acceptance test. Furthermore, changes in one may require changes in the other (it’s the “first-feature paradox” from GOOS). This is why you first work on the infrastructure and only then move on to work on the first feature.

Obstacles and Tradeoffs

By front-loading all infrastructure work you’re postponing the delivery of the first feature. Some managers might feel uncomfortable when this happens, as they expect a very rapid pace at the beginning of the project. You might feel some pressure to cut corners. However, their confidence should increase when you deliver the walking skeleton and they have a real, albeit minimal, system to play with. Most hard problems in software development are communication problems, and this is no exception. You should explain how the walking skeleton will reduce unexpected delays at the end of the project.

The walking skeleton may not save you from the recursiveness of Hofstadter’s Law but it may make the last few days of the project a little more sane.

Editor’s Note: Today we have a guest post from Brandon Savage, an expert in crafting maintainable PHP applications. We invited Brandon to post on the blog to share some of his wisdom around bringing object-oriented design to PHP, a language with procedural roots.

One of the most common questions that PHP developers have about object-oriented programming is, “why should I bother?” Unlike languages such as Python and Ruby, where every string and array is an object, PHP is very similar to its C roots, and procedural programming is possible, if not encouraged.

Even though an object model exists in PHP, it’s not a requirement that developers use it. In fact, it’s possible to build great applications (see WordPress) without object-orientation at all.

So why bother?

There are five good reasons why object-oriented PHP applications make sense, and why you should care about writing your applications in an object-oriented style.

1. Objects are extensible.

It should be possible to extend the behavior of objects through both composition and inheritance, allowing objects to take on new life and usefulness in new settings.

Of course, developers have to be careful when extending existing objects, since changing the public API of an object creates a whole new object type. But, if done well, developers can revitalize old libraries through the power of inheritance.

2. Objects are replaceable.

The whole point of object-oriented development is to make it easy to swap objects out for one another. The Liskov Substitution Principle tells us that one object should be replaceable with another object of the same type (or of a subtype) and that the program should still work.

It can be hard to see the value in removing a component and replacing it with another component, especially early on in the development lifecycle. But the truth is that things change: needs, technologies, resources. There may come a point where you’ll need to incorporate a new technology, and having a well-designed object-oriented application will only make that easier.

3. Objects are testable.

It’s possible to test procedural applications, albeit not well. Most procedural applications don’t have an easy way to separate external components (like the file system, database, etc.) from the components under test. This means that under the best circumstances, testing a procedural application is more of an integration test than a unit test.

Object-oriented development makes unit testing far easier and more practical. Since you can easily mock and stub objects (see Mockery, a great mock object library), you can replace the objects you don’t need and test the ones you do. Since a unit test should be testing only one segment of code at a time, mock and stub objects make this possible.

4. Objects are maintainable.

There are a few problems with procedural code that make it more difficult to maintain. One is the likelihood of code duplication, that insidious parasite of unmaintainability. Object-oriented code, on the other hand, makes it easy for developers to put code in one place, and to create an expressive API that explains what the code is doing, even without having to know the underlying behavior.

Another problem that object-oriented programming solves is the fact that procedural code is often complicated. Multiple conditional statements and varying paths create code that is hard to follow. There’s a measure of complexity — cyclomatic complexity — that shows us the number of decision points in the code. A score greater than 12 is usually considered bad, but good object-oriented code will generally have a score under 6.

For example, if you know that a method accepts an object as one of its arguments, you don’t have to know anything about how that object works to meet the requirements. You don’t have to format that object, or manipulate the data to meet the needs of the method; instead, you can just pass the object along. You can further manipulate that object with confidence, knowing that the object will properly validate your inputs as valid or invalid, without you having to worry about it.

5. Objects produce catchable (and recoverable) errors.

Most procedural PHP developers are passingly familiar with throwing and catching exceptions. However, exceptions are intended to be used in object-oriented development, and they are best used as ways to recover from various error states.

Exceptions are catchable, meaning that they can be handled by our code. Unlike other mechanisms in PHP (like trigger_error()), we can decide how to handle an exception and determine if we can move forward (or terminate the application).

The Bottom Line

Object-oriented programming opens up a whole world of new possibilities for developers. From testing to extensibility, writing object-oriented PHP is superior to procedural development in almost every respect.

Automatically Validating Millions of Data Points

At Code Climate, we feel it’s critical to deliver dependable and accurate static analysis results. To do so, we employ a variety of quality assurance techniques, including unit tests, acceptance tests, manual testing and incremental rollouts. They all are valuable, but we still had too much risk of introducing hard-to-detect bugs. To fill the gap, we’ve added a new tactic to our arsenal: known good testing.

"Known good" testing refers to capturing the result of a process, and then comparing future runs against the saved or known good version to discover unexpected changes. For us, that means running full Code Climate analyses of a number of open source repos, and validating every aspect of the result. We only started doing this last week, but it’s already caught some hard-to-detect bugs that we otherwise may not have discovered until code hit production.

Why known good testing?

Known good testing is common when working with legacy code. Rather than trying to specify all of the logical paths through an untested module, you can feed it a varied set of inputs and turn the outputs into automatically verifying tests. There’s no guarantee the outputs are correct in this case, but at least you can be sure they don’t change (which, in some systems, is even more important).

For us, given that we have a relatively reliable and comprehensive set of unit tests for our analysis code, the situation is a bit different. In short, we find known good testing valuable because of three key factors:

- The inputs and outputs of our analysis are extremely detailed. There are a huge number of syntactical structures in Ruby, and we derive a ton of information from them.

- Our analysis depends on external code that we do not control, but do want to update from time to time (e.g. RubyParser).

- We are extremely sensitive to any changes in results. For example, even a tiny variance in our detection of complex methods across the 20k repositories we analyze would ripple into changes of class ratings, resulting in incorrect metrics being delivered to our customers.

These add up to mean that traditional unit and acceptance testing is necessary but not sufficient. We use unit and acceptance tests to provide faster results and more localized detection of regressions, but we use our known good suite (nicknamed Krylon) to sanity check our results against a dozen or so repositories before deploying changes.

How to implement known good testing

The high level plan is pretty straightforward:

- Choose (or randomly generate, using a known seed) a set of inputs for your module or program.

- Run the inputs through a known-good version of the system, persisting the output.

- When testing a change, run the same inputs through the new version of the system and flag any output variation.

- For each variation, have a human determine whether or not the change is expected and desirable. If it is, update the persisted known good records.
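The first three steps above can be sketched in a few lines of Ruby. This is only an illustration under stated assumptions: `analyze` is a hypothetical stand-in for the system under test, and the helper names, the seed and the file location are invented for the example.

```ruby
require "json"
require "tmpdir"

# Hypothetical system under test: any deterministic function of its input.
def analyze(text)
  words = text.split
  { "words" => words.size, "longest" => words.max_by(&:length).to_s }
end

# Step 1: generate inputs from a known seed so every run sees the same set.
def generate_inputs(seed, count)
  rng = Random.new(seed)
  vocabulary = %w[alpha beta gamma delta epsilon]
  Array.new(count) do
    Array.new(rng.rand(1..5)) { vocabulary[rng.rand(vocabulary.size)] }.join(" ")
  end
end

# Step 2: persist the outputs of a trusted version as pretty-printed JSON.
def save_known_good(path, inputs)
  records = inputs.map { |input| { "input" => input, "output" => analyze(input) } }
  File.write(path, JSON.pretty_generate(records))
end

# Step 3: rerun the same inputs and collect every output that changed.
def variations(path, inputs)
  expected = JSON.parse(File.read(path))
  inputs.zip(expected).filter_map do |input, record|
    actual = analyze(input)
    next if actual == record["output"]

    { "input" => input, "expected" => record["output"], "actual" => actual }
  end
end

inputs = generate_inputs(42, 10)
path = File.join(Dir.mktmpdir, "known_good.json")
save_known_good(path, inputs)
diffs = variations(path, inputs)
puts diffs.empty? ? "PASS" : JSON.pretty_generate(diffs)
```

Step four stays manual: a person reads the reported variations and, if they are intended, reruns the save step to update the known good file.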

The devil is in the details, of course. In particular, if the outputs of your system are non-trivial (in our case, a set of MongoDB documents spanning multiple collections), persisting them can be a little tricky. We could keep them in MongoDB, of course, but that would not make them as accessible to humans (and tools like diff and GitHub) as a plain-text format like JSON would. So I wrote a little bit of code to dump records out as JSON:

    dir = "krylon/#{slug}"
    repo_id = Repo.create!(url: "git://github.com/#{slug}")
    run_analysis(repo_id)
    FileUtils.mkdir_p(dir)

    # One JSON file per collection
    %w[smells constants etc.].each do |coll|
      File.open("#{dir}/#{coll}.json", "w") do |f|
        # Drop fields that vary between runs and round floats before saving
        docs = db[coll].find(repo_id: repo_id).map do |doc|
          round_floats(doc.except(*ignored_fields))
        end

        # Sort the documents so the file is stable and diffs cleanly
        sorted_docs = JSON.parse(docs.sort_by(&:to_json).to_json)
        f.puts JSON.pretty_generate(sorted_docs)
      end
    end

Then there is the matter of comparing the results of a test run against the known good version. Ruby has a lot of built-in functionality that makes this relatively easy, but it took a few tries to get a harness set up properly. We ended up with something like this:

    dir = "krylon/#{slug}"
    repo_id = Repo.create!(url: "git://github.com/#{slug}")
    run_analysis(repo_id)

    %w[smells constants etc.].each do |coll|
      actual_docs = db[coll].find(repo_id: repo_id).to_a
      expected_docs = JSON.parse(File.read("#{dir}/#{coll}.json"))

      actual_docs.each do |actual|
        # Round-trip through JSON so the document has the same shape as the saved records
        actual = JSON.parse(actual.to_json).except(*ignored_fields)

        if (index = expected_docs.index(actual))
          # Delete the match so it can only match one time
          expected_docs.delete_at(index)
        else
          puts "Unable to find match:"
          puts JSON.pretty_generate(actual)
          puts
          puts "Expected:"
          puts JSON.pretty_generate(expected_docs)
          raise "Krylon validation failed for #{coll}"
        end
      end

      if expected_docs.empty?
        puts " PASS #{coll} (#{actual_docs.count} docs)"
      else
        puts "Expected not empty after search. Remaining:"
        puts JSON.pretty_generate(expected_docs)
        raise "Krylon validation failed for #{coll}"
      end
    end

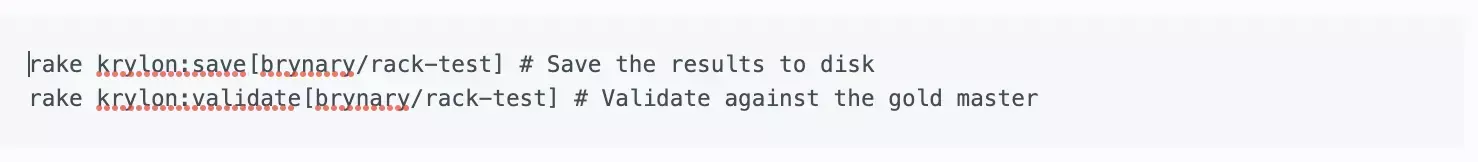

All of this is invoked by a couple of Rake tasks.

Our CI system runs the rake krylon:validate task. If it fails, someone on the Code Climate team reviews the results, and either fixes an issue or uses rake krylon:save to update the known good version.
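The original task definitions are not reproduced here, so the following is only a guess at their shape. The task names `krylon:save` and `krylon:validate` come from the text above; the helper methods and the list of repository slugs are hypothetical placeholders for the save and validate code shown earlier.

```ruby
require "rake" # not needed inside a Rakefile; included so the sketch runs standalone

# Hypothetical stand-ins for the save and validate code shown earlier.
def save_known_good(slug)
  puts "Saving known good results for #{slug}"
end

def validate_against_known_good(slug)
  puts "Validating #{slug} against known good results"
end

# Hypothetical list of repositories to analyze.
KRYLON_SLUGS = %w[example/repo-one example/repo-two].freeze

namespace :krylon do
  desc "Run the analysis and overwrite the known good records"
  task :save do
    KRYLON_SLUGS.each { |slug| save_known_good(slug) }
  end

  desc "Run the analysis and compare it against the known good records"
  task :validate do
    KRYLON_SLUGS.each { |slug| validate_against_known_good(slug) }
  end
end
```

Keeping the two tasks separate means CI can only ever validate, while updating the known good records remains a deliberate step taken by a person.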

Gotchas

In building Krylon, we ran into a few issues. They were all pretty simple to fix, but I’ll list them here to hopefully save someone some time:

- Floats – Floating point numbers cannot be reliably compared using the equality operator. We took the approach of rounding them to two decimal places, and that has been working so far.

- Timestamps – Columns like created_at and updated_at will vary every time your code runs. We just exclude them.

- Record IDs – Same as above.

- Non-deterministic ordering of hash keys and arrays – This took a bit more time to track down. Sometimes Code Climate would generate hashes or arrays, but the order of those data structures was undefined and variable. We had two choices: update the Krylon validation code to allow this, or make them deterministic. We went with updating the production code to be deterministic with respect to order because it was simple.
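The first three gotchas can be handled by a single normalization pass over each document before it is saved or compared. The sketch below is an assumption about what such a helper might look like, not the code Krylon uses: the `normalize` method and the `IGNORED_FIELDS` list are hypothetical, and sorting hash keys is an extra step added here so that saved files diff cleanly.

```ruby
require "json"

# Hypothetical list of fields that change on every run.
IGNORED_FIELDS = %w[_id created_at updated_at].freeze

# Recursively drop ignored fields, round floats to two decimal places,
# and sort hash keys so the serialized output is stable.
def normalize(value)
  case value
  when Hash
    value.reject { |key, _| IGNORED_FIELDS.include?(key.to_s) }
         .sort_by { |key, _| key.to_s }
         .to_h { |key, child| [key.to_s, normalize(child)] }
  when Array
    value.map { |child| normalize(child) }
  when Float
    value.round(2)
  else
    value
  end
end

doc = {
  "score" => 3.14159,
  "_id" => "abc123",
  "created_at" => "2013-01-01",
  "name" => "User",
  "smells" => [{ "weight" => 2.71828, "kind" => "complexity" }]
}
puts JSON.pretty_generate(normalize(doc))
```

Array order is left untouched on purpose, since the choice described above was to make the production code deterministic rather than to loosen the comparison.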

Wrapping up

Known good testing is not a substitute for unit tests and acceptance tests. However, it can be a valuable tool in your toolbox for dealing with legacy systems, as well as certain specialized cases. It’s a fancy name, but implementing a basic system took less than a day and began yielding benefits right away. Like us, you can start with something simple and rough, and iterate on it down the road.

Exercising Your Team’s Refactoring Muscles Together

When teams try to take control of their technical debt and improve the maintainability of their codebase over time, one problem that can crop up is a lack of refactoring experience. Teams are often composed of developers with a mix of experience levels (both overall and within the application domain) and stylistic preferences, making it difficult for well-intentioned contributors to effect positive change.

There are a variety of techniques to help in these cases, but one I’ve had success with is “Mob Refactoring”. It’s a variant of Mob Programming, which is like pair programming with more than two people (though still with one computer). This sounds crazy at first, and I certainly don’t recommend working like this all the time, but it can be very effective for leveling up the refactoring abilities of the team and establishing shared conventions for style and structure of code.

Here’s how it works:

- Assemble the team for an hour around a computer and a projector. It’s a great opportunity to order food and eat lunch together, of course.

- Bring an area of the codebase that is in need of refactoring. Have one person drive the computer, while the rest of the team navigates.

- Attempt to refactor the code as much as possible within the hour.

- Don’t expect to produce production-ready code during these sessions. When you’re done, throw out the changes. Do not try to finish the refactoring after the session – it’s an easy way to get lost in the weeds.

The idea is that the value of the exercise is in the conversations that will take place, not the resulting commits. Mob Refactoring sessions provide the opportunity for less experienced members of the team to ask questions like, “Why do we do this like that?”, or for more senior programmers to describe different implementation approaches that have been tried, and how they’ve worked out in the past. The discussions will help close the experience gap and often lead to a new consensus about the preferred way of doing things.

Do this a few times, and rotate the area of focus and the lead each week. Start with a controller, then work on a model, or perhaps a troublesome view. Give each member of the team a chance to select the code to be refactored and drive the session. Even the least experienced member of your team can pick a good project – and they’ll probably learn more by working on a problem that is at the top of their mind.

If you have a team that wants to get better at refactoring, but experience and differing style patterns are a challenge, give Mob Refactoring a try. It requires little preparation, and only an hour of investment (although I would recommend trying it three times before judging the effect). If you give it a go, let me know how it went for you in the comments.

An Object Design Epiphany

Successful software projects are always changing. Every new requirement comes with the responsibility to determine exactly how the new or changed behaviors will be codified into the system, often in the form of objects.

For the longest time, when I had to change a behavior in a codebase I followed these rough steps:

- Locate the behavior in the application’s code, usually inside some class (hopefully just one)

- Determine how it needed to be adjusted in order to meet the new requirements

- Write one or more failing tests that the class must satisfy

- Update the class to satisfy the tests

Simple, right? Eventually I realized that this simple workflow leads to messy code.

The temptation when changing an existing system is to implement the desired behavior within the structure of the current abstractions. Repeat this without adjustment, and you’ll quickly end up contorting existing concepts or working around legacy behaviors. Conditionals pile up, and shotgun surgery becomes standard operating procedure.

One day, I had an epiphany. When making a change, rather than surveying the current landscape and asking “How can I make this work like I need?”, take a step back, look at each relevant abstraction and ask, “How should this work?”.

The names of the modules, classes and methods convey meaning. When you change the behavior within them in isolation, the cohesion between those names and the implementation beneath them may begin to fray.

If you are continually ensuring that your classes work exactly as their names imply, you’ll often find that the change in behavior you seek is better represented by adjusting the landscape of types in your system. You may end up introducing a collaborator, or you might simply need to tweak the name of a class to align with its new behavior.
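As a small illustration of introducing a collaborator, consider a hypothetical requirement to give members a 10% discount. All class names below are invented for the example, and amounts are integer cents to keep the arithmetic exact.

```ruby
# Making it "work like I need": the rule is squeezed into the existing class,
# which now knows about membership even though its name does not imply it.
class CrowdedInvoice
  def initialize(amount_cents, member:)
    @amount_cents = amount_cents
    @member = member
  end

  def total
    @member ? @amount_cents * 90 / 100 : @amount_cents
  end
end

# Asking "how should this work?" suggests a separate concept: a discount.
class NoDiscount
  def apply(amount_cents)
    amount_cents
  end
end

class MemberDiscount
  def apply(amount_cents)
    amount_cents * 90 / 100
  end
end

# Invoice stays true to its name and delegates pricing rules to a collaborator.
class Invoice
  def initialize(amount_cents, discount: NoDiscount.new)
    @amount_cents = amount_cents
    @discount = discount
  end

  def total
    @discount.apply(@amount_cents)
  end
end

puts Invoice.new(1000).total
puts Invoice.new(1000, discount: MemberDiscount.new).total
```

The next discount rule becomes a new class rather than another conditional inside `Invoice`.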

This type of conscientiousness is difficult to apply rigorously, but like any habit it can be built up over time. Your reward will be a codebase maintainable years into the future.

Four Simple Guidelines to Help Get It Right

One struggle for software development teams is determining when it is appropriate to refactor. It is quite a quandary.

Refactor too early and you could over-abstract your design and slow down your team. YAGNI! You’ll also make sub-optimal design decisions because of the limited information you have.

On the other hand, if you wait too long to refactor, you can end up with a big ball of mud. The refactoring may have grown to be a Herculean effort, and all the while your team has been suffering from decreased productivity as they tiptoe around challenging code.

So what’s a pragmatic programmer to do? Let’s take a look at a concrete set of guidelines that try to answer this question. Generally, take the time to refactor now if any of the following are true:

- Refactoring will speed up the task at hand. This is a no-brainer, because you’ll get your current work done more quickly, and the refactoring you performed will benefit your team over time. Of course, this is a judgement call, but experienced developers will hone their sense for these cases.

- The refactoring is quick and contained. Many refactorings consist of making small changes, generally within a single class. Because you’re altering an encapsulated unit of structure, often these types of changes require no modifications to collaborators or unit tests. The most common example is applying the Extract Method refactoring in order to implement the Composed Method pattern, but other refactorings like Replace Temp with Query fall into this category. Katrina Owen once said:

“Small refactorings are like making a low cost investment that always pays dividends. Take advantage of that every time.”

Remember, source code is generally “Write once, read hundreds (or thousands) of times”. Spending a little extra time now cleaning up the internal structure of your classes makes them more approachable to every developer who opens that source code file in the future.

- The un-factored code has three strikes. Keep track (either in the back of your head or documented somewhere) of each time you run into a problem or friction that would have been avoided if your code had been better factored. When a given problem spot causes your team trouble three times, it’s time to clean it up. This allows you to focus on the issues that are actually slowing your team down, not just the areas that seem messy.

- You’re at risk of digging a hole that will take more than a day to fix. The worst code in every app is usually stuck in god objects that feel almost impossible to refactor, but it wasn’t always this way.

A key practice to employ when considering a larger refactoring is asking yourself this question:

“If I pass on doing the refactoring now, how long would it take to do later?”

If it would take less than a day to perform later, there is less urgency to do it now. It means that if a change needs to be made later, you can be confident you won’t be stuck in the weeds for days on end to whip the code into a workable state in order to implement the feature or bug fix.

Conversely, if passing on the refactoring creates a risk of digging technical debt that would take more than a day to resolve, it should probably be dealt with immediately. If you wait, that day could become two, or three or four days. The longer the time a refactoring takes, the less likely it is to ever be performed.

So it’s important to limit the technical debt you carry to issues that can be resolved in short order if they need to be. Violate this guideline, and you increase the risk of having developers feel the need to spend days cleaning things up, a practice that is sure to (rightly) make your whole organization uneasy.
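For readers unfamiliar with the "quick and contained" refactorings named in the second guideline, here is a rough sketch of Extract Method applied until the public method reads as a Composed Method. The report and its data are hypothetical, chosen only to keep the example short.

```ruby
# Before: one method mixes parsing, filtering, summing and formatting.
def report_before(lines)
  rows = lines.map { |line| line.split(",") }
  paid = rows.select { |row| row[2] == "paid" }
  total = paid.sum { |row| row[1].to_i }
  "#{paid.size} paid orders, total #{total}"
end

# After: each step is extracted into a small named method, and the public
# method is composed of calls at a single level of abstraction.
class OrderReport
  def initialize(lines)
    @lines = lines
  end

  def summary
    "#{paid_rows.size} paid orders, total #{total}"
  end

  private

  def rows
    @lines.map { |line| line.split(",") }
  end

  def paid_rows
    rows.select { |row| row[2] == "paid" }
  end

  def total
    paid_rows.sum { |row| row[1].to_i }
  end
end

lines = ["1,100,paid", "2,250,pending", "3,50,paid"]
puts report_before(lines)
puts OrderReport.new(lines).summary
```

The change stays inside one class and leaves the public result untouched, which is why refactorings like this rarely require updates to collaborators or their tests.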